Introduction

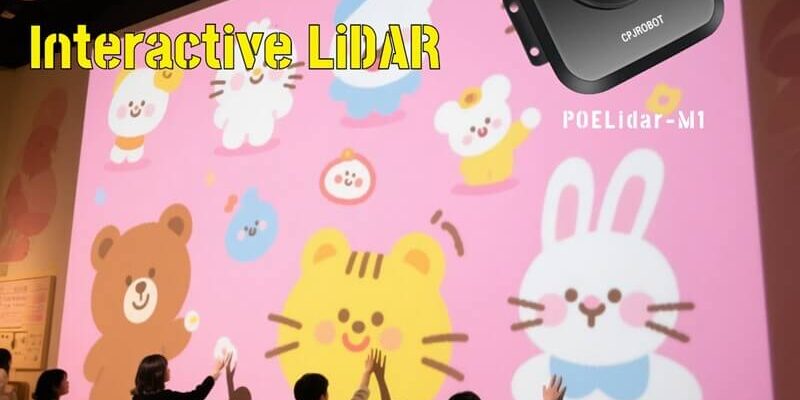

With the rising demand for hygienic and intuitive human-machine interaction, LiDAR multi-point touch systems are emerging as a powerful solution. By detecting subtle hand and finger movements in space, these systems allow users to interact with digital surfaces—without ever making physical contact.

But how does LiDAR detect and track multiple touch points simultaneously? This article explores the core technologies behind the system, from signal emission to 3D coordinate mapping.

1. Signal Emission and Touch Detection

LiDAR devices, mounted at the sides of or above a display area, emit rapid pulses of invisible light, usually from a near-infrared laser. In a typical scanning LiDAR, a rotating optic sweeps these pulses across the interaction zone, forming a thin sensing layer over the entire area.
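How dense that sensing layer is depends on how many pulses the scanner fires per sweep. The back-of-the-envelope sketch below assumes a rotating 2D scanner and uses hypothetical figures (36,000 samples per second, 10 rotations per second) rather than the specifications of any particular device. The function names are illustrative only.

```python
import math


def angular_step_deg(sample_rate_hz: float, scan_rate_hz: float, sweep_deg: float = 360.0) -> float:
    """Angle between consecutive pulses for a scanner sweeping sweep_deg, scan_rate_hz times per second."""
    samples_per_sweep = sample_rate_hz / scan_rate_hz
    return sweep_deg / samples_per_sweep


def beam_spacing_m(distance_m: float, step_deg: float) -> float:
    """Arc length between neighboring beams at a given distance (arc = radius * angle in radians)."""
    return distance_m * math.radians(step_deg)


if __name__ == "__main__":
    # Hypothetical scanner: 36,000 samples/s at 10 rotations/s gives 3,600 samples per rotation.
    step = angular_step_deg(36_000, 10)
    gap_mm = beam_spacing_m(3.0, step) * 1000
    print(f"angular step: {step:.2f} deg, beam gap at 3 m: {gap_mm:.1f} mm")
```

With these assumed numbers, neighboring beams sit about 5.2 mm apart at 3 m, so a fingertip roughly 1 cm wide interrupts about two beams per sweep. The gap grows in proportion to distance.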

When a user’s finger or hand enters the detection field, it reflects part of the light back toward the sensor. From each returning pulse, the LiDAR determines the object’s distance and direction and calculates its precise position within the sensing area.
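For a time-of-flight LiDAR, distance follows from how long a pulse takes to come back, and the scan angle at which it returned fixes the direction. The minimal sketch below assumes a direct time-of-flight sensor that reports one round-trip time and one angle per return (some sensors instead measure phase shift or use triangulation). The helper names are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the reflecting object: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def polar_to_cartesian(range_m: float, angle_deg: float) -> tuple[float, float]:
    """Convert a (range, scan angle) return into x/y in the sensor's own scan plane."""
    theta = math.radians(angle_deg)
    return (range_m * math.cos(theta), range_m * math.sin(theta))


if __name__ == "__main__":
    # A return arriving 10 ns after emission lies roughly 1.5 m away.
    r = range_from_round_trip(10e-9)
    x, y = polar_to_cartesian(r, 30.0)
    print(f"range={r:.3f} m, x={x:.3f} m, y={y:.3f} m")
```

The resulting (x, y) pair is expressed in the sensor’s own frame; it still has to be mapped to screen coordinates later in the pipeline.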

2. Spatial Localization of Multiple Touch Points

To accurately identify multiple touch points, the system may use one of two setups:

- Multi-plane detection: By installing sensors on two or more planes, the system captures intersecting data to reconstruct the 3D position of each gesture point. This enables precise tracking of movement depth, shape, and location.

- Multi-sensor fusion on a single plane: Multiple LiDAR units arranged on one scanning plane can work together. Their overlapping detection areas provide high-resolution, wide-area coverage and real-time recognition of many touch points at once.
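Single-plane fusion comes down to two steps: express every sensor’s points in one shared coordinate frame, then merge detections of the same finger reported by overlapping sensors. This is a minimal sketch assuming each sensor’s mounting position and yaw are already known from calibration. `SensorPose`, `fuse`, and the 3 cm merge radius are hypothetical, and the greedy merge is a simplification of the clustering a production system might use.

```python
import math
from dataclasses import dataclass


@dataclass
class SensorPose:
    """Hypothetical mounting pose of one LiDAR in the shared display frame."""
    x_m: float
    y_m: float
    yaw_deg: float


def to_shared_frame(point, pose):
    """Rotate a sensor-local (x, y) point by the sensor's yaw, then shift it by the sensor's position."""
    px, py = point
    t = math.radians(pose.yaw_deg)
    return (pose.x_m + px * math.cos(t) - py * math.sin(t),
            pose.y_m + px * math.sin(t) + py * math.cos(t))


def fuse(points_per_sensor, poses, merge_radius_m=0.03):
    """Combine all sensors' points; a point within merge_radius_m of an existing cluster is averaged into it."""
    clusters = []  # each entry: [sum_x, sum_y, count]
    for points, pose in zip(points_per_sensor, poses):
        for p in points:
            gx, gy = to_shared_frame(p, pose)
            for c in clusters:
                cx, cy = c[0] / c[2], c[1] / c[2]
                if math.hypot(gx - cx, gy - cy) < merge_radius_m:
                    c[0] += gx
                    c[1] += gy
                    c[2] += 1
                    break
            else:
                # No nearby cluster: treat this as a new touch point.
                clusters.append([gx, gy, 1])
    return [(sx / n, sy / n) for sx, sy, n in clusters]


if __name__ == "__main__":
    # Sensor A at the origin; sensor B 2 m away, rotated 180 degrees to face A.
    poses = [SensorPose(0.0, 0.0, 0.0), SensorPose(2.0, 0.0, 180.0)]
    seen_by_a = [(1.0, 0.5), (0.3, 0.2)]
    seen_by_b = [(1.0, -0.5)]  # the same finger as A's first point, in B's own frame
    print(fuse([seen_by_a, seen_by_b], poses))
```

Here both sensors see a finger at about (1.0, 0.5) in the shared frame, so it is reported once, while the point only sensor A detects stays separate.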

3. Signal Acquisition and High-Speed Processing

The reflected signals are first converted from analog to digital by high-speed A/D converters. This raw data is then processed in real time through:

- FPGA (Field Programmable Gate Array) for fast, parallel signal filtering and validation

- DSP (Digital Signal Processor) for further computation to determine the 2D or 3D coordinates of each touch point
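In the real device these stages run in FPGA logic and DSP firmware, but the idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the vendor’s pipeline: one 2D scan arrives as parallel lists of angles and ranges, `math.inf` marks angles with no echo, a 3-sample median filter stands in for the FPGA’s filtering, and a simple range-jump segmentation stands in for the DSP’s touch-point extraction. Function names and thresholds are hypothetical.

```python
import math
from statistics import median


def median_filter(ranges, window=3):
    """Replace each range with the median of its neighbors to suppress single-sample spikes."""
    half = window // 2
    return [median(ranges[max(0, i - half):i + half + 1]) for i in range(len(ranges))]


def extract_touch_points(angles_deg, ranges, max_range_m=2.0, jump_m=0.05, min_samples=2):
    """Group consecutive in-zone returns into objects and return each object's centroid (x, y)."""
    segments, current, prev_r = [], [], None
    for a, r in zip(angles_deg, ranges):
        valid = 0.0 < r <= max_range_m  # validation: drop no-echo and out-of-zone readings
        if valid and current and abs(r - prev_r) <= jump_m:
            current.append((a, r))  # same object: the range changes smoothly
        else:
            if len(current) >= min_samples:
                segments.append(current)  # close the previous object
            current = [(a, r)] if valid else []
        prev_r = r
    if len(current) >= min_samples:
        segments.append(current)

    centroids = []
    for seg in segments:
        xs = [r * math.cos(math.radians(a)) for a, r in seg]
        ys = [r * math.sin(math.radians(a)) for a, r in seg]
        centroids.append((sum(xs) / len(xs), sum(ys) / len(ys)))
    return centroids


if __name__ == "__main__":
    # One hypothetical scan; math.inf marks angles with no echo.
    angles = list(range(10))
    ranges = [math.inf, 1.0, 1.01, 1.0, math.inf, math.inf, 1.5, 1.51, math.inf, math.inf]
    print(extract_touch_points(angles, median_filter(ranges)))
```

In this example the two runs of consistent returns become two touch points, roughly 1.0 m and 1.5 m from the sensor; readings beyond `max_range_m` are treated as outside the touch zone.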

This high-speed processing chain enables smooth and accurate tracking even in dynamic, multi-user scenarios.
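Smooth multi-user tracking also requires each touch point to keep the same identity from one scan to the next, so that software can tell which finger is dragging. A simple, common approach is greedy nearest-neighbor matching with a distance gate; the sketch below assumes it, with a hypothetical `TouchTracker` class and a 10 cm gate. More robust systems may use optimal assignment (for example, the Hungarian algorithm) or motion prediction instead.

```python
import math
from itertools import count


class TouchTracker:
    """Keeps a stable ID for each touch point across scan frames (greedy nearest-neighbor)."""

    def __init__(self, max_jump_m=0.1):
        self.max_jump_m = max_jump_m  # hypothetical gate: a larger move starts a new touch
        self.tracks = {}              # id -> last known (x, y)
        self._ids = count(1)

    def update(self, points):
        """Match this frame's points to existing tracks and return {id: (x, y)}."""
        unmatched = dict(self.tracks)
        new_tracks = {}
        for p in points:
            best_id, best_d = None, self.max_jump_m
            for tid, q in unmatched.items():
                d = math.dist(p, q)
                if d < best_d:
                    best_id, best_d = tid, d
            if best_id is None:
                best_id = next(self._ids)  # a new finger entered the sensing area
            else:
                del unmatched[best_id]
            new_tracks[best_id] = p
        # Tracks still in `unmatched` belong to fingers that left the sensing area.
        self.tracks = new_tracks
        return dict(new_tracks)


if __name__ == "__main__":
    tracker = TouchTracker()
    print(tracker.update([(0.0, 0.0), (1.0, 1.0)]))    # two fingers get IDs 1 and 2
    print(tracker.update([(1.02, 1.0), (0.01, 0.0)]))  # the same fingers keep their IDs
```

Greedy matching depends on the order of points in each frame; that is usually acceptable when fingers are well separated relative to the gate distance.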

4. Coordinate Mapping and Interaction Feedback

Once coordinates are calculated, they are sent to the host computer, where interactive software maps each point to corresponding areas on a display—whether it’s a projector screen, LED wall, or tiled LCD surface.
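Mapping sensor coordinates onto the display is essentially a calibration problem. One simple option, assumed here for illustration, is to record three known screen positions during setup and fit an affine transform (scale, rotation, shear, and offset) from the sensor plane to pixels; some calibration tools use four or more points and a perspective transform instead. `fit_affine`, `to_screen`, and the 1920×1080 display are hypothetical.

```python
def fit_affine(sensor_pts, screen_pts):
    """Fit u = a*x + b*y + c and v = d*x + e*y + f from three calibration pairs (Cramer's rule)."""
    (x1, y1), (x2, y2), (x3, y3) = sensor_pts
    det = x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)
    if abs(det) < 1e-12:
        raise ValueError("calibration points must not be collinear")

    def solve(t1, t2, t3):
        # Solve [x y 1] . (p, q, r) = t for the three calibration points.
        p = (t1 * (y2 - y3) + t2 * (y3 - y1) + t3 * (y1 - y2)) / det
        q = (x1 * (t2 - t3) + x2 * (t3 - t1) + x3 * (t1 - t2)) / det
        r = (x1 * (y2 * t3 - y3 * t2) + x2 * (y3 * t1 - y1 * t3) + x3 * (y1 * t2 - y2 * t1)) / det
        return p, q, r

    (u1, v1), (u2, v2), (u3, v3) = screen_pts
    return solve(u1, u2, u3), solve(v1, v2, v3)


def to_screen(point, coeffs, width_px, height_px):
    """Map a sensor-plane point to pixel coordinates, clamped to the display edges."""
    (a, b, c), (d, e, f) = coeffs
    x, y = point
    u = a * x + b * y + c
    v = d * x + e * y + f
    return (min(max(u, 0.0), width_px - 1), min(max(v, 0.0), height_px - 1))


if __name__ == "__main__":
    # Hypothetical calibration: sensor-plane metres -> pixels on a 1920x1080 display.
    coeffs = fit_affine([(0.0, 0.0), (2.0, 0.0), (0.0, 1.0)],
                        [(0.0, 0.0), (1920.0, 0.0), (0.0, 1080.0)])
    print(to_screen((1.0, 0.5), coeffs, 1920, 1080))  # center of the screen: (960.0, 540.0)
```

Clamping keeps a touch detected slightly outside the calibrated area from producing off-screen coordinates.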

Users can then perform non-contact actions such as:

- Clicking or tapping

- Swiping or dragging

- Zooming or rotating (multi-finger gestures)
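Given the tracked positions of each touch point, these gestures reduce to simple geometry. The sketch below assumes a tap is a short, nearly stationary contact, a swipe is a fast stroke, a drag is a slow one, and two-finger zoom and rotation come from how the vector between the fingers changes. All thresholds and function names are hypothetical; interactive software would tune them to each installation.

```python
import math


def classify_stroke(start, end, duration_s, tap_max_move_m=0.01, tap_max_s=0.3, swipe_min_speed_m_s=0.5):
    """Label a one-finger stroke as 'tap', 'swipe' (fast), or 'drag' (slow)."""
    moved = math.dist(start, end)
    if moved <= tap_max_move_m and duration_s <= tap_max_s:
        return "tap"
    speed = moved / max(duration_s, 1e-6)  # guard against a zero duration
    return "swipe" if speed >= swipe_min_speed_m_s else "drag"


def pinch_zoom_rotate(a_start, b_start, a_end, b_end):
    """Zoom factor and rotation in degrees implied by two fingers moving (fingers must start apart)."""
    v0 = (b_start[0] - a_start[0], b_start[1] - a_start[1])
    v1 = (b_end[0] - a_end[0], b_end[1] - a_end[1])
    scale = math.hypot(*v1) / math.hypot(*v0)
    angle = math.degrees(math.atan2(v1[1], v1[0]) - math.atan2(v0[1], v0[0]))
    angle = (angle + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    return scale, angle


if __name__ == "__main__":
    print(classify_stroke((0.0, 0.0), (0.005, 0.0), 0.1))  # tap
    print(classify_stroke((0.0, 0.0), (0.2, 0.0), 0.2))    # swipe: 1 m/s
    print(pinch_zoom_rotate((0, 0), (1, 0), (0, 0), (0, 2)))  # spread 2x, turned 90 degrees
```

A positive angle means counterclockwise rotation in the sensor’s coordinate frame; the display mapping determines how that appears on screen.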

The system delivers a fluid and natural interactive experience, without the need for physical touchscreens or buttons.

5. Key Advantages of LiDAR Touch Interaction

| Feature | Description |
|---|---|
| Contactless Operation | Enables floating gesture input without direct touch |
| Long-Distance Connection | Supports cable runs of up to 100 m between sensor and host over Ethernet |
| True Multi-User Support | Detects multiple users and gestures at the same time |
| Environmental Flexibility | Operates reliably under strong lighting, outdoors, and on non-flat surfaces |

Conclusion

LiDAR multi-point touch systems use advanced optical sensing and real-time data fusion to unlock a new level of interactive control. Through high-speed analog-to-digital conversion, FPGA/DSP processing, and multi-plane detection, these systems deliver precise, touchless multi-point control—ideal for exhibitions, classrooms, museums, and digital signage.

About CPJROBOT

CPJROBOT is a leading manufacturer focused on PoE (Power over Ethernet) LiDAR and intelligent reception service robots. We provide cutting-edge solutions for interactive displays, immersive spaces, and commercial automation.

✅ Plug-and-play PoE LiDAR hardware

✅ 100+ free interactive games and gesture templates

✅ Free calibration and control software

✅ Custom OEM/ODM service available

Ready to Build a Touchless, Interactive Experience?

Whether you need an interactive wall, table, or floor projection system, CPJROBOT’s LiDAR technology empowers you with long-distance, multi-point, non-contact control—built for the future of interaction.

Contact us today to explore our solutions or request a demo.

Hashtags:

#LiDAR #TouchlessTechnology #MultiTouch #InteractiveProjection #GestureControl #POELiDAR #SmartDisplay #CPJROBOT #HCI #DigitalExperience #ImmersiveTech