Introduction

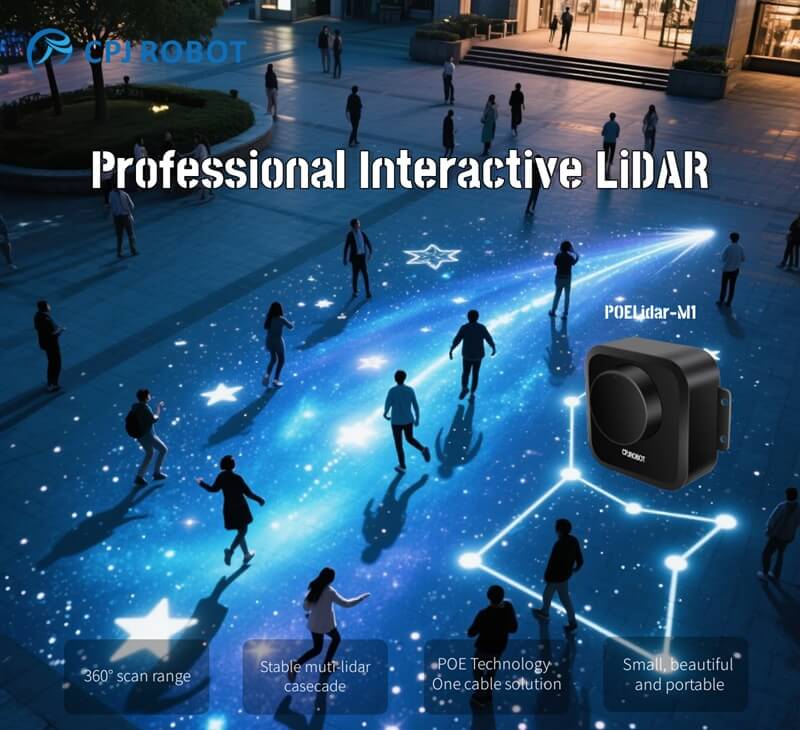

As the demand for immersive, hygienic, and large-scale interactive experiences grows, LiDAR interactive projection systems offer a practical solution: non-contact, multi-point touch interaction, with the sensor connected to a host computer up to 100 meters away.

This article explains how LiDAR sensing technology enables touchless, gesture-based control by combining wide-area signal coverage, long-distance data transmission, and real-time signal processing, while maintaining accuracy and stability in complex environments.

1. Wide-Area Signal Coverage for Interaction

LiDAR sensors (e.g., laser-based time-of-flight (ToF) devices) are installed beside or above a display area such as a projection wall, interactive tabletop, or floor. These devices emit invisible light pulses that form a detection layer across the entire display surface.

When a user moves their hand or body into this space, the LiDAR signal is reflected or interrupted, allowing the sensor to capture motion data with high precision—even across a wide interaction zone.
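As a rough illustration of how a detection layer turns into positions, the sketch below converts one sweep of range readings into (x, y) points and checks whether each point falls inside the display area. The function names, the angle convention, and the assumption that the sensor sits at one corner of a rectangular zone are hypothetical and are not taken from any particular device.

```python
import math

def scan_to_points(scan, angle_min_deg, angle_step_deg):
    """Convert range readings in meters to (x, y) points in the sensor frame.

    Assumption: beam i points at angle_min_deg + i * angle_step_deg,
    and a reading of None or <= 0 means the beam returned nothing.
    """
    points = []
    for i, r in enumerate(scan):
        if r is None or r <= 0:
            continue  # no return for this beam
        theta = math.radians(angle_min_deg + i * angle_step_deg)
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points

def inside_zone(point, width_m, height_m):
    """True if the point lies in a width x height rectangle with the sensor at its corner."""
    x, y = point
    return 0.0 <= x <= width_m and 0.0 <= y <= height_m
```

Points outside the rectangle (walls, passers-by beyond the display) can then be discarded before any touch detection runs.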

2. Long-Distance, Lossless Signal Transmission

LiDAR devices connect to the host computer over Ethernet (LAN). A single cable run can reach up to 100 meters while keeping communication fast and stable, so the host system can be concealed well away from the display, a major advantage for museums, exhibitions, and outdoor setups.

Optional USB or serial-to-LAN modules provide additional flexibility, ensuring seamless integration and robust data transmission across various installation scenarios.
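To make the data path concrete, here is a minimal sketch of how a host might decode a packet of touch coordinates received over the network. The packet layout (a little-endian header with a point count and a millisecond timestamp, followed by x/y pairs in millimeters) is purely an assumption for illustration; a real sensor's wire format will differ and should be taken from its documentation.

```python
import struct

# Hypothetical layout, not a real device protocol:
HEADER = struct.Struct("<HI")  # point count (uint16), timestamp in ms (uint32)
POINT = struct.Struct("<hh")   # x and y in millimeters (int16 each)

def parse_packet(data: bytes):
    """Decode one packet into (timestamp_ms, [(x_mm, y_mm), ...])."""
    if len(data) < HEADER.size:
        raise ValueError("packet shorter than header")
    count, timestamp_ms = HEADER.unpack_from(data, 0)
    expected = HEADER.size + count * POINT.size
    if len(data) < expected:
        raise ValueError("truncated packet")
    points = [
        POINT.unpack_from(data, HEADER.size + i * POINT.size)
        for i in range(count)
    ]
    return timestamp_ms, points
```

Validating the length before unpacking means a damaged or partial packet is rejected rather than being read as a wrong touch position.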

3. Real-Time Multi-Point Touch Detection

Captured analog signals are converted into digital form via A/D converters and processed by high-speed FPGA and DSP units. These processors extract motion features, such as:

- Distance and depth using phase-based laser measurement

- Simultaneous tracking of multiple fingers or users

- Non-contact gesture recognition with high accuracy and low latency

This allows for real-time identification of multiple interactive points without physical touch.
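Two of the steps above can be sketched in a few lines. The first function applies the standard phase-shift ranging relation, d = c·Δφ / (4π·f), where f is the modulation frequency. The second groups neighboring scan points into separate touch points by splitting wherever the gap between consecutive points exceeds a threshold. Both are simplified illustrations with hypothetical names and parameters, not the algorithm that runs on the FPGA/DSP hardware.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def phase_to_distance(phase_rad, mod_freq_hz):
    """Distance from the phase shift of a modulated laser signal.

    Only unambiguous for phase shifts below 2*pi, i.e. up to c / (2 * f).
    """
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)

def cluster_points(points, max_gap_m):
    """Group points (in scan order) into clusters and return their centroids.

    Assumption: a new cluster starts whenever two consecutive points
    are more than max_gap_m apart.
    """
    clusters, current = [], []
    for p in points:
        if current and math.dist(p, current[-1]) > max_gap_m:
            clusters.append(current)
            current = []
        current.append(p)
    if current:
        clusters.append(current)
    return [
        (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c))
        for c in clusters
    ]
```

Each centroid can be treated as one interactive point, so two hands in the detection layer produce two separate coordinates.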

4. Intelligent Software for Gesture Control

The host PC runs specialized LiDAR interaction software, which receives and processes coordinate data from the sensor. It renders visual responses such as clicks, drags, or object movements based on user gestures.
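As a sketch of what this software layer might do, the example below scales a sensor coordinate to screen pixels and labels a finished touch track as a click or a drag. It assumes the sensor frame is already aligned with a rectangular display (real installations rely on a calibration step), and the function names and the 20-pixel drag threshold are illustrative choices only.

```python
import math

def to_pixels(point_m, zone_w_m, zone_h_m, screen_w_px, screen_h_px):
    """Scale a point in meters to pixel coordinates, clamped to the screen."""
    x, y = point_m
    px = round(x / zone_w_m * (screen_w_px - 1))
    py = round(y / zone_h_m * (screen_h_px - 1))
    px = min(max(px, 0), screen_w_px - 1)
    py = min(max(py, 0), screen_h_px - 1)
    return px, py

def classify_gesture(track_px, drag_threshold_px=20):
    """Label a touch track (pixel positions from touch-down to lift-off).

    Returns "drag" if the end point moved at least drag_threshold_px
    from the start point, "click" otherwise, or None for an empty track.
    """
    if not track_px:
        return None
    (x0, y0), (x1, y1) = track_px[0], track_px[-1]
    moved = math.hypot(x1 - x0, y1 - y0)
    return "drag" if moved >= drag_threshold_px else "click"
```

The threshold keeps small hand tremors from being read as drags. A suitable value depends on sensor noise and display size.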

Built-in support for multi-user and multi-point gestures ensures smooth operation—even under strong ambient light or on irregular surfaces like curved walls or water.

5. System Architecture and Application Scenarios

| Feature | Description |
|---|---|
| Signal Coverage | Invisible sensing layer across the display area for touchless gesture control |
| Long-Distance Connectivity | Up to 100 m Ethernet connection between LiDAR sensor and host computer |
| Multi-Point Detection | Real-time FPGA/DSP processing with accurate tracking of multiple gestures |
| Software Compatibility | Touchless UI software enables drag, click, multi-user interaction |
| Ideal Use Cases | Exhibition halls, outdoor environments, curved or reflective surfaces |

Conclusion

LiDAR interactive projection unlocks the power of contactless, long-range multi-point interaction using advanced laser sensing and robust system architecture. With high precision, low latency, and wide coverage, it’s a future-ready solution for interactive exhibitions, smart signage, outdoor displays, and immersive experiences.

Brought to You by CPJROBOT

CPJROBOT is a leading innovator in the field of PoE (Power over Ethernet) LiDAR and reception service robots. We specialize in delivering professional-grade LiDAR systems for interactive applications, supported by:

✅ Free calibration and interaction software

✅ 100+ ready-to-use interactive games

✅ Customization and OEM/ODM services

✅ Plug-and-play Power over Ethernet (PoE) support

Ready to Bring Your Walls, Floors, or Tables to Life?

Contact CPJROBOT today and experience the next generation of touchless, interactive projection—from short-range setups to 100-meter installations in real-world environments.
