Introduction

In the era of touchless technology, natural human-computer interaction (HCI) is more important than ever. From smart homes to public displays and robotics, users expect intuitive interfaces that respond to nuanced gestures.

LiDAR-based gesture recognition systems are changing the way we interact by replacing physical touch with precise, contact-free sensing. This article explains how LiDAR technology, paired with deep learning, captures complex hand gestures and makes touchless interaction feel more natural.

Key Mechanisms Behind Complex Gesture Recognition with LiDAR

1. Multi-Dimensional Feature Extraction

LiDAR sensors emit laser light and measure its reflections to capture high-resolution spatial and temporal data. Each gesture is broken down into:

- Distance (range from the sensor)

- Radial velocity (via the Doppler effect, on coherent sensors such as FMCW LiDAR)

- Azimuth and elevation angles

Together, these features form a rich temporal signature of the user’s hand motion, allowing precise tracking in 3D space.
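To make the feature list above concrete, here is a minimal Python sketch that turns one detection (range, radial velocity, azimuth, elevation) into a 3D position plus velocity. The `Detection` class, its field names, and the axis convention (x forward, y left, z up) are assumptions chosen for illustration, not part of any CPJROBOT API.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """One reflection from the hand (hypothetical structure for illustration)."""
    range_m: float        # distance from the sensor, in metres
    velocity_mps: float   # radial velocity, in metres per second
    azimuth_rad: float    # horizontal angle, in radians
    elevation_rad: float  # vertical angle, in radians


def to_cartesian(d):
    """Convert range/azimuth/elevation to (x, y, z) in the sensor frame."""
    horizontal = d.range_m * math.cos(d.elevation_rad)
    x = horizontal * math.cos(d.azimuth_rad)
    y = horizontal * math.sin(d.azimuth_rad)
    z = d.range_m * math.sin(d.elevation_rad)
    return (x, y, z)


def feature_vector(d):
    """Pack position and radial velocity into one per-detection feature list."""
    x, y, z = to_cartesian(d)
    return [x, y, z, d.velocity_mps]
```

Stacking one such vector per frame gives the kind of time series that the recognition models described later can consume.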

2. High-Resolution Range-Doppler Imaging

Using signal processing techniques such as the Fast Fourier Transform (FFT), LiDAR systems convert reflected signals into 2D Range-Doppler (RD) images that represent:

- Distance-time relationships

- Velocity changes across movement frames

- Fine-grained motion details

This enables recognition of even subtle and complex gestures, such as finger pinches or curved hand swipes.
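The RD imaging step can be sketched in a few lines of Python. This example assumes an FMCW-style sensor that delivers one frame as a grid of complex samples (`frame[chirp][sample]`), which the article does not specify. It uses a naive DFT from the standard library so it runs without extra packages; a real pipeline would use an optimized FFT. All function names are hypothetical.

```python
import cmath
import math


def dft(seq):
    """Naive discrete Fourier transform (stand-in for an optimized FFT)."""
    n = len(seq)
    return [
        sum(seq[k] * cmath.exp(-2j * math.pi * m * k / n) for k in range(n))
        for m in range(n)
    ]


def range_doppler_map(frame):
    """Turn frame[chirp][sample] into magnitudes rd[doppler_bin][range_bin]."""
    # First transform: along the samples of each chirp, giving range bins.
    range_profiles = [dft(chirp) for chirp in frame]
    n_chirps, n_range = len(frame), len(frame[0])
    rd = [[0.0] * n_range for _ in range(n_chirps)]
    # Second transform: along the chirps for each range bin, giving Doppler bins.
    for r in range(n_range):
        column = [range_profiles[c][r] for c in range(n_chirps)]
        for d, value in enumerate(dft(column)):
            rd[d][r] = abs(value)
    return rd


def synthetic_frame(n_chirps, n_samples, range_bin, doppler_bin):
    """Ideal single-target signal that lands exactly on one RD cell."""
    return [
        [
            cmath.exp(2j * math.pi * (range_bin * s / n_samples + doppler_bin * c / n_chirps))
            for s in range(n_samples)
        ]
        for c in range(n_chirps)
    ]


def peak_cell(rd):
    """Return the (doppler_bin, range_bin) with the largest magnitude."""
    cells = ((d, r) for d in range(len(rd)) for r in range(len(rd[0])))
    return max(cells, key=lambda dr: rd[dr[0]][dr[1]])
```

Feeding `synthetic_frame(8, 16, range_bin=5, doppler_bin=2)` through `range_doppler_map` and `peak_cell` recovers the cell the target was placed in, which is the basic property a gesture classifier relies on when it reads a sequence of RD images.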

3. Deep Neural Network-Based Modeling

To interpret complex gestures, CPJROBOT LiDAR systems use:

- 1D-ScNN for temporal feature extraction

- CNN + LSTM hybrids to model time-sequence relationships

- Multi-layer classifiers to label gestures with a reported accuracy above 96%

These models can differentiate between gestures like swipe, wave, push, rotate, or zoom with high confidence and low latency.
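As an illustration of the final labeling step only, the Python sketch below converts raw network outputs (logits) into a gesture label with a confidence score. The gesture names come from the paragraph above; the logit values, the `min_confidence` threshold, and the function names are assumptions, and the upstream feature extractor is not shown.

```python
import math

GESTURES = ["swipe", "wave", "push", "rotate", "zoom"]


def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, min_confidence=0.6):
    """Return (label, confidence); label is None when confidence is too low."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < min_confidence:
        return None, probs[best]
    return GESTURES[best], probs[best]
```

Rejecting low-confidence predictions instead of always returning a label is one common way to keep a touchless interface from reacting to incidental hand movement.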

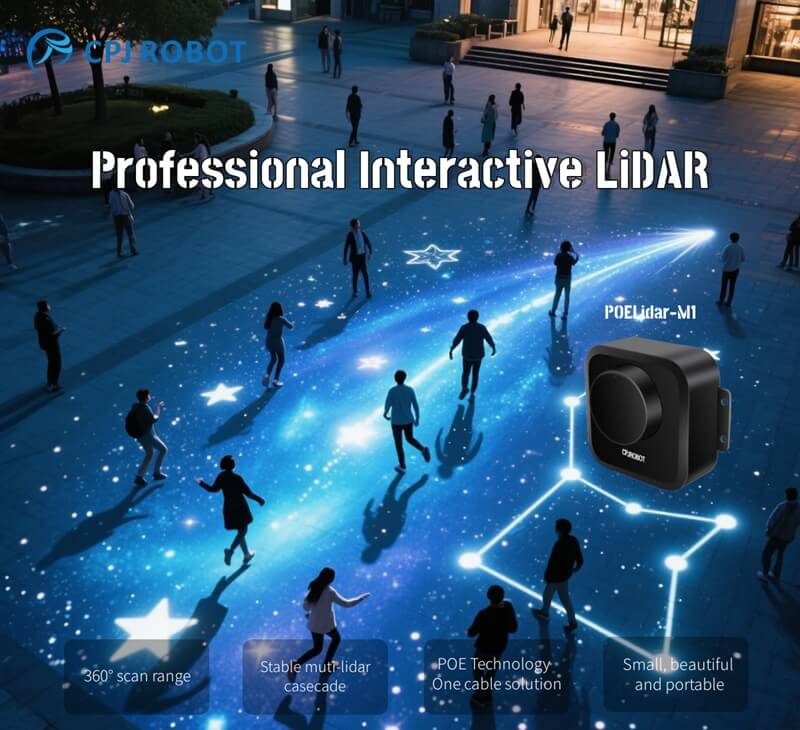

4. Multi-View Fusion and Multi-Task Learning

When multiple LiDAR sensors are combined, data from different viewing angles can be fused. Multi-task learning frameworks allow the system to:

- Handle multi-dimensional inputs simultaneously

- Fuse spatial and temporal signals

- Improve robustness under occlusion or complex lighting

This leads to more resilient and accurate gesture recognition, especially in dynamic or public environments.
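One simple form of multi-view fusion is to bring every sensor's points into a shared coordinate frame before recognition. The Python sketch below assumes each sensor's pose is known as a yaw angle and a position, which is a simplification (a full calibration would also include pitch and roll). The function names are hypothetical.

```python
import math


def to_world(point, yaw_rad, position):
    """Rotate a sensor-frame point about the vertical axis, then translate it."""
    x, y, z = point
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return (
        c * x - s * y + position[0],
        s * x + c * y + position[1],
        z + position[2],
    )


def fuse_views(views):
    """Merge points from several sensors into one world-frame list.

    Each view is a tuple: (points, yaw_rad, position).
    """
    merged = []
    for points, yaw_rad, position in views:
        merged.extend(to_world(p, yaw_rad, position) for p in points)
    return merged
```

For example, two sensors facing each other 2 m apart that each see a hand 1 m in front of them should agree on its world position after fusion, so a hand hidden from one sensor can still be tracked by the other.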

5. Smart Data Augmentation & Attention Mechanisms

To address challenges like feature ambiguity and low semantic clarity, LiDAR gesture systems incorporate:

- Autoencoders for enhanced feature generation

- Attention modules to prioritize key motion patterns

- Noise filtering to focus on relevant gesture data

The result: higher precision in identifying fine-grained or overlapping gestures.
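The idea behind an attention module can be shown with a small Python sketch: each frame gets a relevance score, the scores are normalized with a softmax, and the frame features are averaged using those weights. In a trained network the scores are learned; here they are passed in by hand, and the function name is an assumption for illustration.

```python
import math


def attention_pool(frames, scores):
    """Weight per-frame feature vectors by softmax(scores) and sum them.

    frames: list of equal-length feature lists, one per frame.
    scores: one relevance score per frame (learned in a real model).
    Returns (pooled_features, weights).
    """
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(frames[0])
    pooled = [sum(w * f[i] for w, f in zip(weights, frames)) for i in range(dim)]
    return pooled, weights
```

Frames with high scores dominate the pooled result, so the classifier sees mostly the decisive part of the motion rather than the idle frames around it.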

6. Temporal Sequence Modeling

Time-aware models such as LSTM (Long Short-Term Memory) networks leverage:

- Frame-by-frame gesture evolution

- Inter-frame correlations

- Pattern learning over time

This enables the system to understand continuous gestures—not just static positions—making interaction feel smooth and natural.
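To show how an LSTM carries information from frame to frame, here is a single-unit LSTM cell written out in plain Python. The weights in `DEMO_WEIGHTS` are hand-picked for illustration, not trained values, and a production model would use many units and a deep learning framework.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


# Hypothetical weights: gate name -> (input weight, recurrent weight, bias).
DEMO_WEIGHTS = {
    "input": (1.0, 0.0, 0.0),
    "forget": (0.0, 0.0, 2.0),
    "output": (0.0, 0.0, 2.0),
    "candidate": (1.0, 0.0, 0.0),
}


def lstm_step(x, h, c, w):
    """Advance a single-unit LSTM by one frame; returns (new_h, new_c)."""
    def gate(name, activation):
        w_x, w_h, bias = w[name]
        return activation(w_x * x + w_h * h + bias)

    i = gate("input", sigmoid)         # how much new information to admit
    f = gate("forget", sigmoid)        # how much old memory to keep
    o = gate("output", sigmoid)        # how much memory to expose
    g = gate("candidate", math.tanh)   # proposed new memory content
    c_new = f * c + i * g
    h_new = o * math.tanh(c_new)
    return h_new, c_new


def run_sequence(xs, w):
    """Feed a sequence of per-frame values through the cell, frame by frame."""
    h = c = 0.0
    states = []
    for x in xs:
        h, c = lstm_step(x, h, c, w)
        states.append(h)
    return states
```

Running `run_sequence([1.0, 0.0, 0.0], DEMO_WEIGHTS)` and `run_sequence([0.0, 0.0, 1.0], DEMO_WEIGHTS)` ends in different states even though both sequences contain the same values, which is the order sensitivity that lets such models tell a left-to-right swipe from a right-to-left one.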

7. Interference Immunity and Privacy Protection

LiDAR is an active sensor: it supplies its own laser illumination rather than depending on ambient light, and it does not rely on camera imaging. This provides:

- Excellent performance in dark, bright, or cluttered environments

- Resistance to visual occlusion

- Strong privacy protection, as no camera video is captured

This makes it well suited to public spaces, healthcare, and educational settings where hygiene and privacy are essential.

Summary Table: Why LiDAR Is Ideal for Natural Gesture Interaction

| Capability | Description |
|---|---|
| Multi-Dimensional Data Capture | Captures range, Doppler, and angular motion data |
| RD Imaging | Visualizes hand motion in velocity and distance domains |
| Deep Learning Recognition | Recognizes complex gestures with >96% accuracy |
| Multi-Angle Fusion | Combines data from multiple sensors for 3D accuracy |
| Real-Time Sequence Analysis | Understands gestures over time with LSTM models |
| Attention-Based Enhancement | Highlights relevant gesture features for better classification |
| Light & Privacy Resilience | Works in any lighting condition and ensures user privacy |

Powered by CPJROBOT

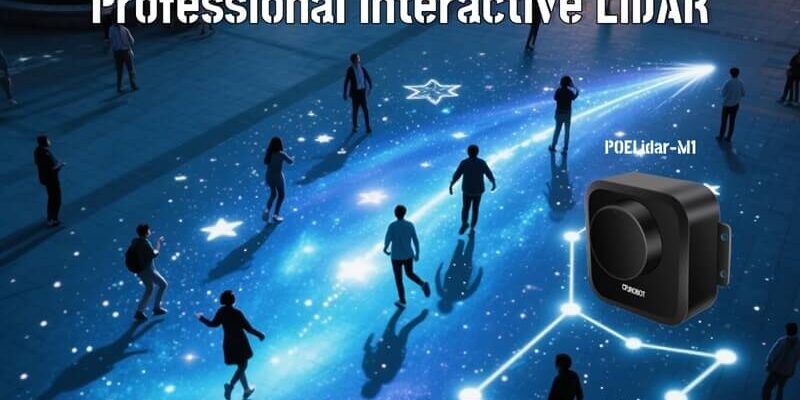

CPJROBOT is a pioneer in LiDAR-powered interaction systems and intelligent service robots. Our gesture recognition solutions are designed for:

- Smart retail and customer service terminals

- Interactive museum or exhibition installations

- Autonomous robots and public kiosks

- Education and healthcare interaction platforms

Ready to Make Gestures Your Next Interface?

Say goodbye to physical buttons and static screens. With CPJROBOT’s LiDAR gesture solutions, your applications become responsive, intelligent, and intuitive—driven by the most natural input system of all: the human hand.

Contact us now to book a live demo or discuss your custom LiDAR-based interaction project.
