Live performances and concerts are no longer just about sound and visuals—they are increasingly about real-time audience interaction.

PoE Interactive LiDAR has emerged as one of the most reliable technologies for creating contactless, multi-user, large-scale interaction across stages, floors, walls, and LED screens.

From interactive music control to crowd-driven lighting effects, LiDAR enables performers and audiences to shape the show together.

Why PoE Interactive LiDAR Fits Live Stage Environments

Concert stages present extreme technical conditions: intense lighting, fast motion, smoke, reflections, and unpredictable audience behavior.

LiDAR excels in these environments because it measures distance and movement with its own laser pulses rather than interpreting color or brightness, which makes it far more stable than camera-based systems.

Because PoE delivers both power and data over a single Ethernet cable, LiDAR systems are also easier to deploy in complex stage structures.

Types of Stage Effects Enabled by LiDAR Interaction

Interactive LED Screens and Stage Walls

Large LED screens or side walls can be transformed into touch-responsive visual interfaces.

- Hand gestures or body proximity trigger:

  - Visual effects

  - Lyrics highlights

  - Sound samples or musical layers

- Multiple people can interact simultaneously, creating:

  - Particle storms

  - Audio-reactive waves

  - Crowd-driven visual patterns

This approach allows entire audience sections to participate, rather than limiting interaction to a single performer.

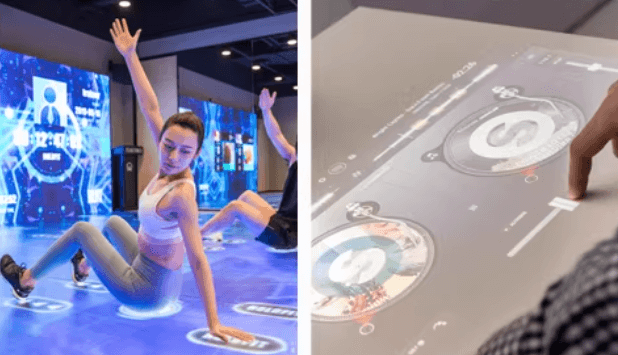
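As a rough sketch of how a wall becomes touch-responsive, assume a 2D scanning LiDAR mounted at the wall's top-left corner whose scan plane runs a few centimeters in front of, and parallel to, the LED surface. Every return that lands inside the wall's rectangle is then a hand or body crossing that plane, and it can be scaled to screen pixels. The `WallConfig` fields, the mounting position, and the angle convention are all assumptions for illustration; a production system would also group neighbouring returns into one touch per hand.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class WallConfig:
    # Hypothetical calibration values, measured on site.
    width_m: float    # physical width of the LED wall
    height_m: float   # physical height of the LED wall
    width_px: int     # horizontal resolution of the wall
    height_px: int    # vertical resolution of the wall


def polar_to_wall(angle_deg: float, distance_m: float) -> Tuple[float, float]:
    """Convert one scan return to wall coordinates in metres.

    Assumes the sensor sits at the wall's top-left corner, 0 degrees points
    along the top edge, and angles increase toward the floor.
    """
    a = math.radians(angle_deg)
    return distance_m * math.cos(a), distance_m * math.sin(a)


def to_pixel(x_m: float, y_m: float, cfg: WallConfig) -> Optional[Tuple[int, int]]:
    """Scale a wall position to a pixel, or return None if it falls outside the wall."""
    if not (0.0 <= x_m < cfg.width_m and 0.0 <= y_m < cfg.height_m):
        return None
    return int(x_m / cfg.width_m * cfg.width_px), int(y_m / cfg.height_m * cfg.height_px)


def scan_to_touches(scan: List[Tuple[float, float]], cfg: WallConfig) -> List[Tuple[int, int]]:
    """Turn a list of (angle_deg, distance_m) returns into touch pixels."""
    touches = []
    for angle, dist in scan:
        pixel = to_pixel(*polar_to_wall(angle, dist), cfg)
        if pixel is not None:
            touches.append(pixel)
    return touches
```

Because each return is processed independently, several people touching the wall at once simply produce several pixels per frame.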

Interactive Floors and Runways

LiDAR works especially well for interactive stage floors and extended runways.

- The floor can display projected visuals such as:

  - Water ripples

  - Flames

  - Rhythm tiles

- When a singer or dancer steps into a zone, the system triggers:

  - Beats and rhythm layers

  - Lighting cues

  - Screen effects

These installations are also effective for:

- Opening warm-up segments

- Mid-show audience interaction games

- Dance-driven performances
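Zone triggering on a floor or runway can be sketched as a set of rectangles in stage coordinates, checked against tracked positions every frame. The sketch assumes an upstream tracker already provides `(x, y)` positions in metres; the zone names and sizes are hypothetical. It is edge-triggered: a cue fires once when a zone goes from empty to occupied, so a dancer standing still does not retrigger the beat on every frame.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple


@dataclass(frozen=True)
class Zone:
    name: str   # hypothetical cue name, e.g. "kick_layer"
    x0: float   # rectangle bounds in stage metres
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


class ZoneTrigger:
    """Reports which zones became occupied since the previous frame."""

    def __init__(self, zones: List[Zone]):
        self.zones = zones
        self.occupied: Set[str] = set()

    def update(self, positions: Iterable[Tuple[float, float]]) -> List[str]:
        positions = list(positions)
        now = {z.name for z in self.zones
               if any(z.contains(x, y) for x, y in positions)}
        entered = sorted(now - self.occupied)  # only zones that were empty last frame
        self.occupied = now
        return entered
```

The returned names would then be forwarded as cues to the audio engine, lighting console, or media server.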

Crowd-Driven Lighting and Sound Control

One of the most powerful applications of PoE Interactive LiDAR is real-time crowd analysis.

By tracking:

- Hand-waving height

- Movement density

- Jumping or collective motion

LiDAR data can be mapped to:

- Lighting intensity and sweep speed

- Visual wave amplitude

- Audio energy or effect layers

This enables a true “audience-driven performance,” where the crowd directly influences the stage atmosphere.
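One way to turn tracking data into control values is to compute a few crowd features per frame and scale each into a DMX level (0 to 255). The sketch assumes a tracker that assigns stable IDs and reports each person's floor position plus the highest point detected above them, used here as a proxy for raised hands; the `Person` fields and all input ranges are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Person:
    x: float       # floor position in stage coordinates, metres
    y: float
    hand_z: float  # highest point detected above this person, metres


def crowd_features(prev: Dict[int, Person], now: Dict[int, Person],
                   dt: float, area_m2: float) -> Tuple[float, float, float]:
    """Return (mean hand height in m, people per m^2, mean speed in m/s).

    Dict keys are track IDs, assumed to come from an upstream tracker.
    """
    if not now:
        return 0.0, 0.0, 0.0
    hand = sum(p.hand_z for p in now.values()) / len(now)
    density = len(now) / area_m2
    common = prev.keys() & now.keys()  # people visible in both frames
    speeds = [math.hypot(now[i].x - prev[i].x, now[i].y - prev[i].y) / dt for i in common]
    speed = sum(speeds) / len(speeds) if speeds else 0.0
    return hand, density, speed


def to_dmx(value: float, lo: float, hi: float) -> int:
    """Map value linearly from [lo, hi] onto a DMX level 0-255, clamping outside the range."""
    t = min(max((value - lo) / (hi - lo), 0.0), 1.0)
    return round(t * 255)
```

For instance, `to_dmx(hand, 1.6, 2.6)` could drive a dimmer channel so that raised hands brighten the rig; the 1.6 to 2.6 m range is a placeholder to tune per venue.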

Key Advantages of PoE Interactive LiDAR for Concerts

Multi-User, Multi-Point, Large-Area Coverage

Depending on the model, a single LiDAR unit can cover an area spanning several to tens of meters while tracking multiple performers or audience members at once.

Compared to pressure floors or infrared curtains:

- No dense cabling is required

- Users can move freely

- Interaction is not limited to fixed trigger points

This makes LiDAR ideal for:

- Front-of-stage interaction zones

- Extended runways

- VIP or audience participation areas
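Tracking several people from one sensor usually starts by grouping the raw returns into blobs, one per person. The sketch below uses simple single-linkage clustering (points closer than `max_gap` join the same blob) and returns each blob's centroid. It is one common technique, not necessarily what any given LiDAR middleware uses, and the 0.3 m gap is a hypothetical tuning value.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def cluster_points(points: List[Point], max_gap: float = 0.3) -> List[Point]:
    """Group 2D returns into blobs and return one centroid per blob.

    Single-linkage: two points closer than max_gap (metres) end up in the same
    blob, directly or through a chain of neighbours. O(n^2), fine for a sketch.
    """
    unvisited = set(range(len(points)))
    centroids: List[Point] = []
    while unvisited:
        seed = unvisited.pop()
        stack, members = [seed], [seed]
        while stack:
            i = stack.pop()
            near = [j for j in unvisited if math.dist(points[i], points[j]) < max_gap]
            for j in near:
                unvisited.remove(j)
                stack.append(j)
                members.append(j)
        cx = sum(points[k][0] for k in members) / len(members)
        cy = sum(points[k][1] for k in members) / len(members)
        centroids.append((cx, cy))
    return centroids
```

In a dense crowd, two people standing close together can merge into one blob, so the gap usually needs tuning per venue.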

Strong Resistance to Stage Lighting and Effects

Concert environments include:

- High-brightness LED walls

- Moving lights and strobes

- Smoke, haze, and fog

Camera-based systems often struggle under these conditions, because strobes, saturated LED walls, and haze constantly change the image they rely on.

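LiDAR, by contrast, remains stable because it relies on time-of-flight distance measurement: it emits its own laser pulses (typically near-infrared) and times their return instead of interpreting the visible light in the scene. Very dense haze can still scatter the pulses, so performance should be verified under show conditions.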
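The time-of-flight principle reduces to simple arithmetic: the sensor times how long its own pulse takes to reach a surface and come back, then halves the round trip. Nothing in the calculation depends on how bright or colorful the scene is. The sketch below is the textbook formula, not any particular sensor's firmware.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # in vacuum; in air light travels about 0.03% slower


def tof_distance(round_trip_s: float) -> float:
    """Distance to a target from the round-trip time of a pulse: d = c * t / 2."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def round_trip_time(distance_m: float) -> float:
    """Inverse of tof_distance: how long a pulse takes to reach a target and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


# A performer 10 m away returns the pulse after roughly 66.7 ns.
print(f"{round_trip_time(10.0) * 1e9:.1f} ns")
```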

Flexible Installation with PoE Power

PoE (Power over Ethernet) dramatically simplifies stage deployment:

- One cable for power and data

- Easy mounting on:

  - Lighting trusses

  - Stage ceilings

  - Side walls

  - Rigging points above audience areas

- Multiple LiDAR units can be networked and merged in software to cover:

  - Wide stages

  - Circular or immersive stage layouts

This reduces setup time and improves reliability during tours and temporary installations.
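Merging several units in software generally means transforming each sensor's points into one shared stage coordinate system. The sketch below assumes each unit has been calibrated to a 2D pose (position plus yaw angle) on the stage; the `SensorPose` fields are hypothetical, and real systems may also need height and tilt.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List, Tuple

Point = Tuple[float, float]


@dataclass
class SensorPose:
    # Hypothetical calibration result for one LiDAR unit.
    x: float        # sensor position in stage coordinates, metres
    y: float
    yaw_deg: float  # angle of the sensor's local x-axis relative to the stage x-axis


def to_stage(pose: SensorPose, points: Iterable[Point]) -> List[Point]:
    """Rotate by the sensor's yaw, then translate by its position."""
    c = math.cos(math.radians(pose.yaw_deg))
    s = math.sin(math.radians(pose.yaw_deg))
    return [(pose.x + c * px - s * py, pose.y + s * px + c * py) for px, py in points]


def merge_scans(scans: Iterable[Tuple[SensorPose, List[Point]]]) -> List[Point]:
    """Combine every unit's points into one stage-wide point list."""
    merged: List[Point] = []
    for pose, points in scans:
        merged.extend(to_stage(pose, points))
    return merged
```

Where coverage overlaps, the same performer appears once per unit, so the merged points still need grouping into one blob per person before tracking.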

Design Considerations for LiDAR Stage Interaction

Coverage Planning and Viewing Angle

Before installation, clearly define the interaction area:

- Main stage

- Extended runway

- Audience sections

- Side or rear LED walls

LiDAR sensors are typically installed:

- Overhead or on trusses

- At a downward or angled-down view

This minimizes occlusion and reduces the dead zones that appear when people block each other from the sensor's view.
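For an overhead, downward-looking sensor, basic trigonometry gives a first coverage estimate: a sensor at height `h` with field of view `theta` covers a width of `2 * (h - t) * tan(theta / 2)` on a horizontal plane at height `t`. The sketch assumes the sensor points straight down with a symmetric field of view and ignores maximum range and angular resolution, so treat the results as a planning estimate to check against the manufacturer's datasheet. The overlap parameter is a hypothetical margin between neighbouring units.

```python
import math


def footprint_width(mount_height_m: float, fov_deg: float, target_height_m: float = 0.0) -> float:
    """Width covered at a horizontal plane by a sensor pointing straight down.

    Measuring at target_height_m (roughly head or hand height) rather than the
    floor reflects where people actually need to be detected.
    """
    if target_height_m >= mount_height_m:
        raise ValueError("target plane must be below the sensor")
    return 2.0 * (mount_height_m - target_height_m) * math.tan(math.radians(fov_deg) / 2.0)


def sensors_needed(area_width_m: float, footprint_m: float, overlap_m: float) -> int:
    """Units needed side by side so that neighbouring footprints overlap by overlap_m."""
    if footprint_m <= overlap_m:
        raise ValueError("footprint must be wider than the overlap")
    if area_width_m <= footprint_m:
        return 1
    return math.ceil((area_width_m - overlap_m) / (footprint_m - overlap_m))
```

For example, a unit on a 6 m truss with a 90 degree field of view covers about 8 m at a 2 m detection height, so a 20 m wide stage with 1 m of overlap needs three units.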

Latency and Audio-Visual Synchronization

For music-driven interaction, system latency must be tightly controlled.

The full signal chain usually includes:

- LiDAR sensing

- Middleware processing

- Media servers or audio engines

- Lighting and video consoles

With proper optimization, end-to-end latency can be kept within tens of milliseconds, ensuring that:

- Visuals stay on beat

- Lighting cues feel immediate

- Performers trust the interaction system
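A simple way to keep the chain honest is a latency budget: list each stage, add them up, and compare the total with a target and with the length of one beat. The stage names mirror the list above, but every number in the sketch, including the 50 ms target, is a placeholder assumption rather than a measured or recommended figure.

```python
from typing import Dict

# Hypothetical per-stage latencies in milliseconds; replace with values measured on the rig.
STAGES_MS: Dict[str, float] = {
    "lidar_sensing": 20.0,   # e.g. one frame at a 50 Hz scan rate
    "middleware": 5.0,
    "media_server": 17.0,    # about one frame at 60 fps
    "console_output": 8.0,
}


def latency_report(stages_ms: Dict[str, float], bpm: float, target_ms: float = 50.0) -> dict:
    """Sum the chain and express the delay as a fraction of one beat at the given tempo."""
    total = sum(stages_ms.values())
    beat_ms = 60_000.0 / bpm
    return {
        "total_ms": total,
        "fraction_of_beat": total / beat_ms,
        "within_target": total <= target_ms,
    }
```

At 120 BPM a beat lasts 500 ms, so the 50 ms placeholder total corresponds to a tenth of a beat.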

Integration with Existing Stage Control Systems

LiDAR interaction data can be translated into standard stage protocols, including:

- OSC / MIDI for music software and live instruments

- Art-Net / sACN / DMX for lighting consoles

- Custom network messages for media servers such as:

  - Resolume

  - Watchout

  - JustAddMusic

This allows LiDAR to fit seamlessly into professional stage workflows.

Practical Use Case Examples

Crowd Energy During a Chorus

During a song’s climax:

- LiDAR measures crowd hand-waving height and density

- Data drives:

  - LED wave height

  - Lighting sweep speed

  - Visual intensity

The result is a feedback loop where the more engaged the audience becomes, the more powerful the stage effects feel.
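The chorus feedback loop can be sketched in two steps: smooth a normalized crowd-energy value (0 to 1) so the effects swell with the audience instead of flickering frame to frame, then interpolate each effect parameter across a range. How energy is derived from hand-waving height and density, the smoothing factor, and every output range below are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Smoother:
    """Exponential moving average of a per-frame signal."""
    alpha: float       # 0..1; higher reacts faster (hypothetical tuning value)
    value: float = 0.0

    def update(self, sample: float) -> float:
        self.value += self.alpha * (sample - self.value)
        return self.value


def lerp(lo: float, hi: float, t: float) -> float:
    """Linear interpolation with t clamped to [0, 1]."""
    return lo + (hi - lo) * min(max(t, 0.0), 1.0)


def chorus_params(energy: float) -> Dict[str, float]:
    """Map normalised crowd energy to effect parameters (placeholder ranges)."""
    return {
        "led_wave_height": lerp(0.1, 1.0, energy),       # fraction of wall height
        "sweep_speed_deg_s": lerp(20.0, 180.0, energy),  # moving-light sweep speed
        "intensity": lerp(0.3, 1.0, energy),             # overall visual intensity
    }
```

Running the smoother once per tracking frame and feeding its output to `chorus_params` keeps the loop responsive while filtering out single-frame spikes.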

Interactive Song Segment

In a dedicated interactive track:

- Selected audience members step onto a LiDAR-enabled stage area

- Each position corresponds to:

  - A rhythm layer

  - A harmony track

  - A sound effect

As participants move, they collectively create a live “audience orchestra,” blending performance and interaction.
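Mapping positions to musical layers can be sketched by dividing the interactive area into a grid and assigning each cell a MIDI note: stepping into a cell sends a Note On and stepping out sends a Note Off, so the music engine can start and stop the matching rhythm, harmony, or effect clip. The cell layout, note numbers, and velocity are hypothetical; the status bytes (0x90 Note On, 0x80 Note Off, with the channel in the low four bits) follow the MIDI 1.0 standard, and the resulting bytes can be written to any MIDI output.

```python
from typing import Dict, Iterable, List, Set, Tuple

Cell = Tuple[int, int]

# Hypothetical layout: each 1 m x 1 m floor cell triggers one note.
CELL_NOTES: Dict[Cell, int] = {
    (0, 0): 36,  # rhythm layer
    (1, 0): 60,  # harmony track
    (2, 0): 72,  # sound effect
}


def occupied_cells(positions: Iterable[Tuple[float, float]], cell_size: float = 1.0) -> Set[Cell]:
    """Grid cells that contain at least one tracked participant."""
    return {(int(x // cell_size), int(y // cell_size)) for x, y in positions}


def midi_events(prev_cells: Set[Cell], cells: Set[Cell],
                channel: int = 0, velocity: int = 100) -> List[bytes]:
    """Raw MIDI: Note On for newly occupied cells, Note Off for just-vacated cells."""
    events = []
    for cell in sorted(cells - prev_cells):
        if cell in CELL_NOTES:
            events.append(bytes([0x90 | channel, CELL_NOTES[cell], velocity]))
    for cell in sorted(prev_cells - cells):
        if cell in CELL_NOTES:
            events.append(bytes([0x80 | channel, CELL_NOTES[cell], 0]))
    return events
```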

Conclusion: LiDAR as a New Instrument for Live Performance

PoE Interactive LiDAR transforms stages into responsive instruments rather than static platforms.

With its ability to handle large spaces, multiple users, intense lighting, and real-time control, LiDAR is becoming a core technology for:

- Concerts

- Music festivals

- Touring shows

- Immersive stage installations

For creators looking to push beyond passive visuals and into true audience-driven performance, LiDAR is no longer experimental—it is production-ready.